Corona, Azure, New Architecture for 2020

2020 started with a lot of time spent at home due to the ongoing Corona crisis. I decided to make use of that time and started a project to re-architect Harvee. Given the complexity (or rather simplicity) of the application, managing the servers I had provisioned no longer felt appropriate. I was also looking for ways to bring costs down further. In the end I decided to make Harvee as serverless as possible and, since I was working a lot with Azure for my job, to explore what Azure had to offer here.

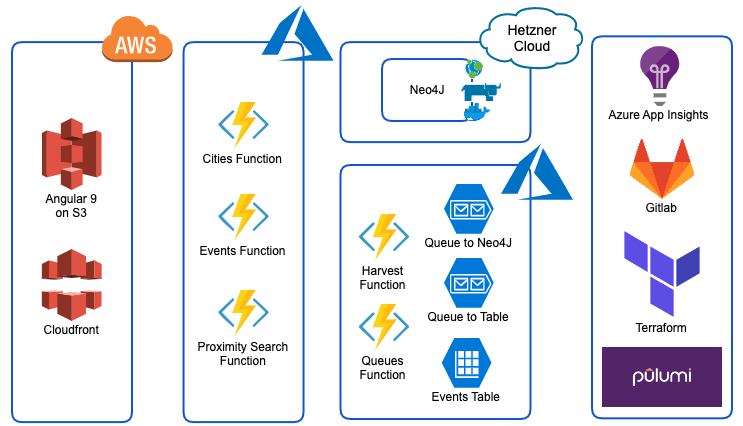

I did not really change the frontend (although upgrading the Angular version was more like a rewrite than an upgrade). After briefly trying Azure CDN, I also decided to stick with Amazon S3 and CloudFront for serving the frontend. The parts behind the frontend, however, changed quite drastically.

To get rid of all the self-hosted web services, which I had written primarily in Python, I settled on Azure Functions. At first I tried writing the functions in Python again, but Python did not feel like a first-class citizen in Azure Functions, so I switched my programming language of choice to TypeScript. Without going into too much detail, I should mention that Azure offers different hosting plans that determine how functions are served. In the simplest and cheapest one, the Consumption plan, function instances are only started when requests come in, so the first request after a period of inactivity has to wait for the runtime to spin up. This delay is known as a cold start, and as you might have noticed, Harvee currently experiences it quite a bit. I won't change this for now, though, because a different hosting plan would mean additional costs.
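Cold starts are one reason to keep expensive setup work out of the per-request code path. The sketch below is not Harvee's code: it assumes a hypothetical expensive dependency (`createClient`) and simplified request/response types instead of the real `@azure/functions` ones. It shows lazy, module-scoped initialization, where the dependency is built on the first invocation in a fresh instance and then reused by later invocations served by the same warm instance.

```typescript
// Hypothetical stand-in for something expensive to set up, such as a storage
// client or configuration loaded at start-up. Not part of any real SDK.
export interface ExpensiveClient {
  lookup(key: string): string;
}

// Simplified request/response shapes for illustration only; these are NOT
// the types exported by the @azure/functions package.
export interface SimpleRequest {
  query: Record<string, string | undefined>;
}

export interface SimpleResponse {
  status: number;
  body: string;
}

// Module-scoped state survives between invocations served by the same
// (warm) instance, but starts empty again after a cold start.
let cachedClient: ExpensiveClient | undefined;
let initCount = 0;

function createClient(): ExpensiveClient {
  initCount += 1; // count how often the expensive setup actually runs
  const data = new Map<string, string>([["harvee", "ok"]]);
  return { lookup: (key) => data.get(key) ?? "unknown" };
}

// Lazily create the client on first use, then reuse it.
export function getClient(): ExpensiveClient {
  if (cachedClient === undefined) {
    cachedClient = createClient();
  }
  return cachedClient;
}

export function initializations(): number {
  return initCount;
}

// The per-request handler only does cheap work and reuses the cached client.
export async function handler(req: SimpleRequest): Promise<SimpleResponse> {
  const client = getClient();
  const key = req.query["key"] ?? "harvee";
  return { status: 200, body: client.lookup(key) };
}
```

This does not remove the cold start itself; it only ensures the initialization cost is paid once per instance rather than on every request.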

I have to admit that I used to be very proud of the data layer I built for the initial version of Harvee. With Kafka, Apache Flink, Elasticsearch, Neo4j and PostgreSQL it felt like I was using the right tools. In the end, though, running them turned out to be quite expensive. To avoid having to manage any data stores myself, I chose Azure Storage, using Azure Queue Storage to process and Azure Table Storage to store the event data. Again, I used Azure Functions to capture and move the data.
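Table Storage addresses every entity by a `PartitionKey` and a `RowKey`, so moving events from a queue into a table mostly comes down to choosing those keys. The sketch below assumes a hypothetical event shape (`HarveeEvent`) that the queue-triggered function has already parsed from JSON; it does not show any Azure SDK or binding calls. Partitioning by user and using an inverted timestamp as the row key is one common pattern for reading the newest events first, not necessarily what Harvee does.

```typescript
// Hypothetical shape of an event taken from Azure Queue Storage
// (assumed already JSON-parsed); not taken from Harvee's actual code.
export interface HarveeEvent {
  id: string; // assumed unique per event
  userId: string;
  type: string;
  timestamp: number; // milliseconds since the Unix epoch
  payload: Record<string, unknown>;
}

// Table Storage entities are addressed by PartitionKey + RowKey (both strings).
export interface EventEntity {
  PartitionKey: string;
  RowKey: string;
  type: string;
  payload: string; // nested objects are stored as a JSON string property
}

// Characters not allowed in PartitionKey/RowKey: / \ # ? and control characters.
const FORBIDDEN_KEY_CHARS = /[\/\\#?\u0000-\u001f\u007f-\u009f]/g;

export function sanitizeKey(value: string): string {
  return value.replace(FORBIDDEN_KEY_CHARS, "_");
}

// Inverted, zero-padded timestamp: keys are compared as strings, so a fixed
// width makes string order match numeric order, and newer events sort first.
export function invertedTimestampKey(timestamp: number): string {
  return (Number.MAX_SAFE_INTEGER - timestamp).toString().padStart(16, "0");
}

export function toEntity(event: HarveeEvent): EventEntity {
  return {
    PartitionKey: sanitizeKey(event.userId),
    // Appending the event id avoids collisions for events in the same millisecond.
    RowKey: `${invertedTimestampKey(event.timestamp)}_${sanitizeKey(event.id)}`,
    type: event.type,
    payload: JSON.stringify(event.payload),
  };
}
```

Because Table Storage returns entities within a partition in ascending `RowKey` order, the inverted, fixed-width timestamp makes the latest events come back first.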

As for tooling (some would call it DevOps tooling), I first got rid of most of my monitoring tools in favour of what the cloud providers offer out of the box. For Azure Functions this means enabling Azure Application Insights as well. Thanks to the very neat integration with Visual Studio Code, you can actually test a lot of a function locally before deploying it. Still, being able to look at logs and exceptions in Application Insights proved very helpful.

On a colleague's recommendation, I took a look at Pulumi for Infrastructure as Code. I am very impressed with its feature set, and, as with all my other components, I can use TypeScript to “program” my infrastructure. The one part I could not do with Pulumi was issuing SSL certificates from Let’s Encrypt. That piece is done with Terraform using the ACME provider for Let’s Encrypt.

Besides just changing technology, another focus of this little project was to make my architecture more secure. I was not at all happy with how I handled certificates and secrets in the previous version of Harvee. I am happy to report that this has improved a lot: certificates and secrets are now stored in Azure Key Vault, with very strict access policies governing how my functions can use them. Where nothing else is possible (mainly at development time), I write secrets to disk in encrypted form.
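The post does not say which encryption scheme is used for secrets written to disk. As one possible approach, here is a minimal sketch using Node's built-in `crypto` module with AES-256-GCM. The function names (`encryptSecret`, `decryptSecret`) and the `iv.tag.ciphertext` format are assumptions for illustration, and the 32-byte key is assumed to come from somewhere safer than the disk itself (for example an environment variable or Key Vault).

```typescript
import { createCipheriv, createDecipheriv, randomBytes } from "node:crypto";

const ALGORITHM = "aes-256-gcm";
const IV_LENGTH = 12; // 96-bit IV, the recommended size for GCM

// Encrypts a secret and packs IV, auth tag and ciphertext into one string
// ("iv.tag.ciphertext", each part base64-encoded) suitable for a file.
export function encryptSecret(plaintext: string, key: Buffer): string {
  if (key.length !== 32) throw new Error("key must be 32 bytes for AES-256");
  const iv = randomBytes(IV_LENGTH); // fresh random IV for every encryption
  const cipher = createCipheriv(ALGORITHM, key, iv);
  const ciphertext = Buffer.concat([cipher.update(plaintext, "utf8"), cipher.final()]);
  const tag = cipher.getAuthTag();
  return [iv, tag, ciphertext].map((b) => b.toString("base64")).join(".");
}

// Reverses encryptSecret; throws if the data was modified or the key is wrong.
export function decryptSecret(packed: string, key: Buffer): string {
  const parts = packed.split(".");
  if (parts.length !== 3) throw new Error("malformed encrypted secret");
  const [iv, tag, ciphertext] = parts.map((p) => Buffer.from(p, "base64"));
  const decipher = createDecipheriv(ALGORITHM, key, iv);
  decipher.setAuthTag(tag);
  // final() verifies the auth tag and throws on any mismatch
  return Buffer.concat([decipher.update(ciphertext), decipher.final()]).toString("utf8");
}
```

GCM is used here because a modified file fails to decrypt instead of silently yielding garbage, and the random IV means encrypting the same secret twice produces different output.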

All in all, I am very happy with the new version of Harvee. It allowed me to learn a lot of new technologies. I will most probably leave Harvee alone for a while now and work on other projects (Kubernetes and IoT are on the list) that are not really related to Harvee. I might still report on their progress here.

By the way, as part of my serverless initiative I turned this blog into a serverless version of itself, too. Previously I ran WordPress on a DigitalOcean droplet. Now the entire website is generated with Hugo, and the result is (again) served by S3 and CloudFront.